Research Lines

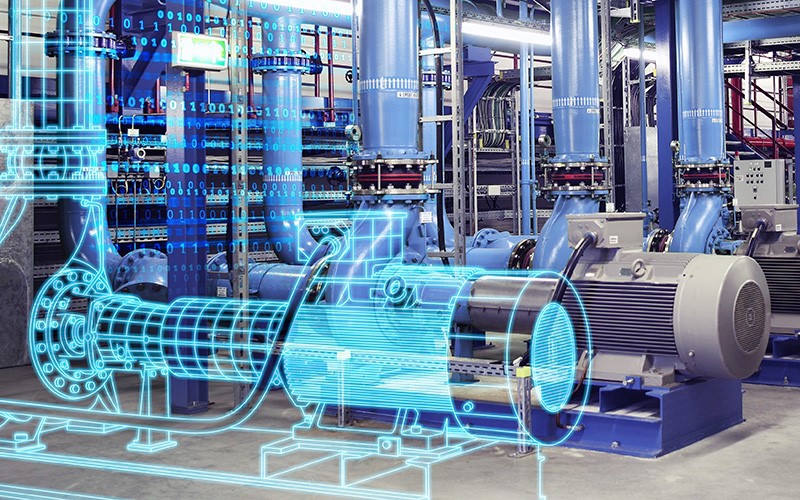

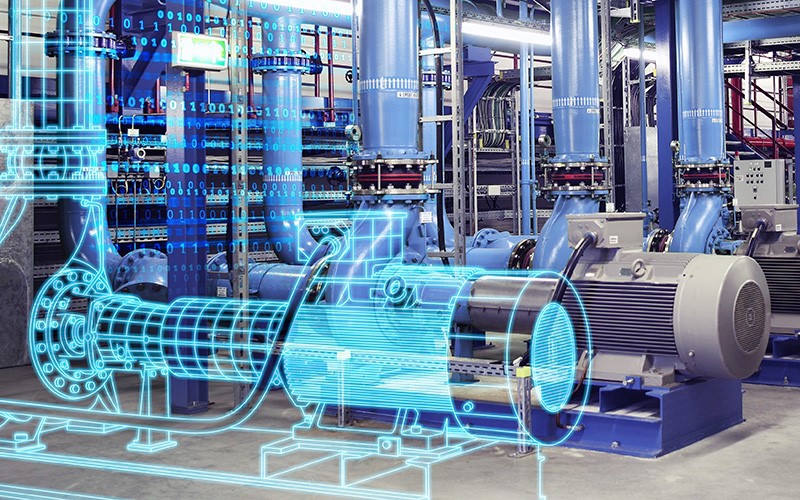

Digital Twin

The Digital Twin concept is generally understood to have been anticipated in David Gelernter's book “Mirror Worlds” (1991) and later elaborated in publications such as Grieves (2014), particularly in presentations on Product Lifecycle Management (PLM). In this early and rather generic view, a Digital Twin is an association between a real product and its virtual equivalent (LIM; ZHENG; CHEN, 2019). Since then, the concept has evolved toward synergistically combining at least three elements: one or more executable models of a system/product, a set of data related to that system/product, and some means of updating or adjusting the model from real-world data (WRIGHT; DAVIDSON, 2020). Another recurring characteristic is the relationship between a Digital Twin and the life cycle of the system/product it refers to, including the processes involved.
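The three elements named above (executable model, data, update mechanism) can be made concrete with a minimal sketch. Everything in it is an illustrative assumption rather than part of the cited works: the `TankTwin` class, the draining-tank scenario, the `gain` parameter, and the simple proportional correction used as the update rule.

```python
from dataclasses import dataclass, field


@dataclass
class TankTwin:
    """Hypothetical digital twin of a draining tank (illustrative only)."""
    level: float        # model state: estimated level, in metres
    drain_rate: float   # model parameter: metres lost per second
    gain: float = 0.5   # assumed weight given to each new measurement
    history: list = field(default_factory=list)  # data from the real system

    def step(self, dt: float) -> float:
        """Executable model: predict the level after dt seconds."""
        self.level = max(0.0, self.level - self.drain_rate * dt)
        return self.level

    def assimilate(self, measured_level: float) -> float:
        """Update mechanism: nudge the state toward a real measurement."""
        self.history.append(measured_level)
        self.level += self.gain * (measured_level - self.level)
        return self.level


twin = TankTwin(level=2.0, drain_rate=0.1)
predicted = twin.step(dt=5.0)      # the model alone predicts the new level
corrected = twin.assimilate(1.3)   # a sensor reading pulls the estimate toward 1.3
```

A real Digital Twin would likely replace the proportional correction with a proper estimation or learning method; the sketch only shows how the three elements fit together.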

Within the life cycle of a system/product, the Digital Twin concept can be applied:

- In the design phase, where it overlaps heavily with the virtual prototype of the system/product, used in experiments to improve the system/product and the processes involved and to gain a better understanding of them;

- During operation, when data and information are received from the real system/product and can be used either to monitor and maintain the real system or to pursue its optimization and continuous improvement.
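As a rough illustration of the monitoring use during operation, one common approach is to compare what the model predicts with what the real system reports and flag large residuals. The function name `flag_anomalies`, the fixed threshold, and the sample values below are all hypothetical.

```python
def flag_anomalies(predicted, measured, threshold):
    """Return the indices where |measured - predicted| exceeds the threshold."""
    return [
        i
        for i, (p, m) in enumerate(zip(predicted, measured))
        if abs(m - p) > threshold
    ]


# Hypothetical temperature series: model output vs. sensor readings.
predicted = [20.0, 20.5, 21.0, 21.5]
measured = [20.1, 20.4, 23.2, 21.6]
anomalies = flag_anomalies(predicted, measured, threshold=1.0)
print(anomalies)
```

A fixed threshold is the simplest possible choice; in practice the acceptable residual would likely depend on sensor noise and model uncertainty.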

Given the above, the scope of this research line is to investigate how AI techniques and methods can contribute to the development of Digital Twins for industrial environments. The questions and challenges to be investigated include:

- How to systematize the introduction of the concept and the development of Digital Twins in different industrial environments and at different levels of scope, from model design to planning the data collection infrastructure and structuring databases, among others;

- How to exploit AI techniques, first in designing the model of a Digital Twin and later in its continuous improvement throughout the product/process life cycle;

- How to validate an AI-based Digital Twin system, considering in particular safety-critical and security-critical systems;

- What the technological challenges and limitations are for introducing the Digital Twin concept in industrial environments, that is, what the minimum requirements are for network infrastructure, equipment connectivity, and system sensing, among others;

- How to exploit integration with other enabling technologies of Industry 4.0, such as robotics and extended reality, among others;

- How to address the fidelity/representativeness of data for systems still in design (for example, using data generated by other systems, prototypes, or models);

- How to address data heterogeneity (in format and quality) for legacy systems, as well as the interpretation and classification of data;

- How to ensure the robustness and adaptability of the solutions developed;

- How to implement different forms of feedback to the real system.

To address these questions and challenges, solutions should rest on the following pillars of Industry 4.0:

- Simulation - simulation is the basis of the Digital Twin; its models can be improved through AI, and AI models can in turn be improved with data generated by the Digital Twin, without disturbing the real systems;

- Internet of Things (IoT) - in Industry 4.0, what distinguishes a Digital Twin from a traditional model is precisely that it is fed with real data, in real time, so that it can mirror the real system at every instant;

- Big Data & Analytics - this is the pillar most closely related to AI, as it is responsible for the analyses to be performed on the data continuously collected from the real system.
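The idea of experimenting on the twin without disturbing the real system can be sketched as a what-if simulation that runs on a copy of the synchronized state. The toy buffer-level model, the `simulate` function, and the `live_state` dictionary are assumptions made for illustration only.

```python
import copy


def simulate(state, inflow, steps, dt=1.0):
    """Advance a toy buffer-level model and return the level trajectory."""
    s = copy.deepcopy(state)  # work on a copy so the live twin state is untouched
    levels = []
    for _ in range(steps):
        s["level"] = max(0.0, s["level"] + (inflow - s["outflow"]) * dt)
        levels.append(s["level"])
    return levels


# Hypothetical state, imagined as synchronized from IoT data.
live_state = {"level": 10.0, "outflow": 2.0}

nominal = simulate(live_state, inflow=2.0, steps=3)  # supply matches demand
failure = simulate(live_state, inflow=0.0, steps=3)  # supply interruption scenario
```

Both scenarios start from the same synchronized state, and `live_state` is left unchanged, so any number of failure scenarios can be explored side by side.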

The expected beneficial impacts include:

- a shorter product/process development cycle, resulting from the anticipation of problems during the design and implementation phases;

- real-time monitoring of the product/process, allowing problems to be anticipated and processes to be improved while the system is in operation;

- the possibility of experimenting with the product/process in simulation, without disturbing the real system;

- greater robustness, provided by the analysis of scenarios that consider failures and unexpected events;

- greater autonomy and adaptability of the system, provided by its ability to 'learn' from the collected data.

References